Beginner's Guide to Language Model Optimization (LMO)

As artificial intelligence becomes more embedded in modern applications, Language Models (LMs) such as GPT, BERT, and LLaMA power everything from chatbots to translation tools. However, deploying a general-purpose model is often not enough: to get reliable results, you usually need to optimize it for your specific use case. This is where Language Model Optimization (LMO) comes in.

If you're new to LMO, this beginner's guide will walk you through the basics, benefits, and best practices of optimizing a language model for your application.

🔍 What is Language Model Optimization (LMO)?

AI Generated image: What is Language Model Optimization (LMO)

Language Model Optimization refers to the process of tuning, adapting, or refining a pretrained language model so it performs better for a specific task, domain, or audience. General-purpose models handle a wide range of tasks, but they may struggle with domain-specific vocabulary, tone, or formats, especially in medical, legal, or financial settings.

LMO helps align the model with your data, goals, and user expectations.

🚀 Why Optimize a Language Model?

AI Generated image: Why Optimize a Language Model?

- Improved Accuracy: Domain adaptation boosts response relevance and correctness.

- Reduced Bias: Optimizing with carefully chosen, representative data can help mitigate cultural, social, or regional biases.

- Efficiency Gains: A smaller or compressed model focused on one task can require less computation and memory.

- Better UX: Tailored outputs improve the user experience in real-world apps.

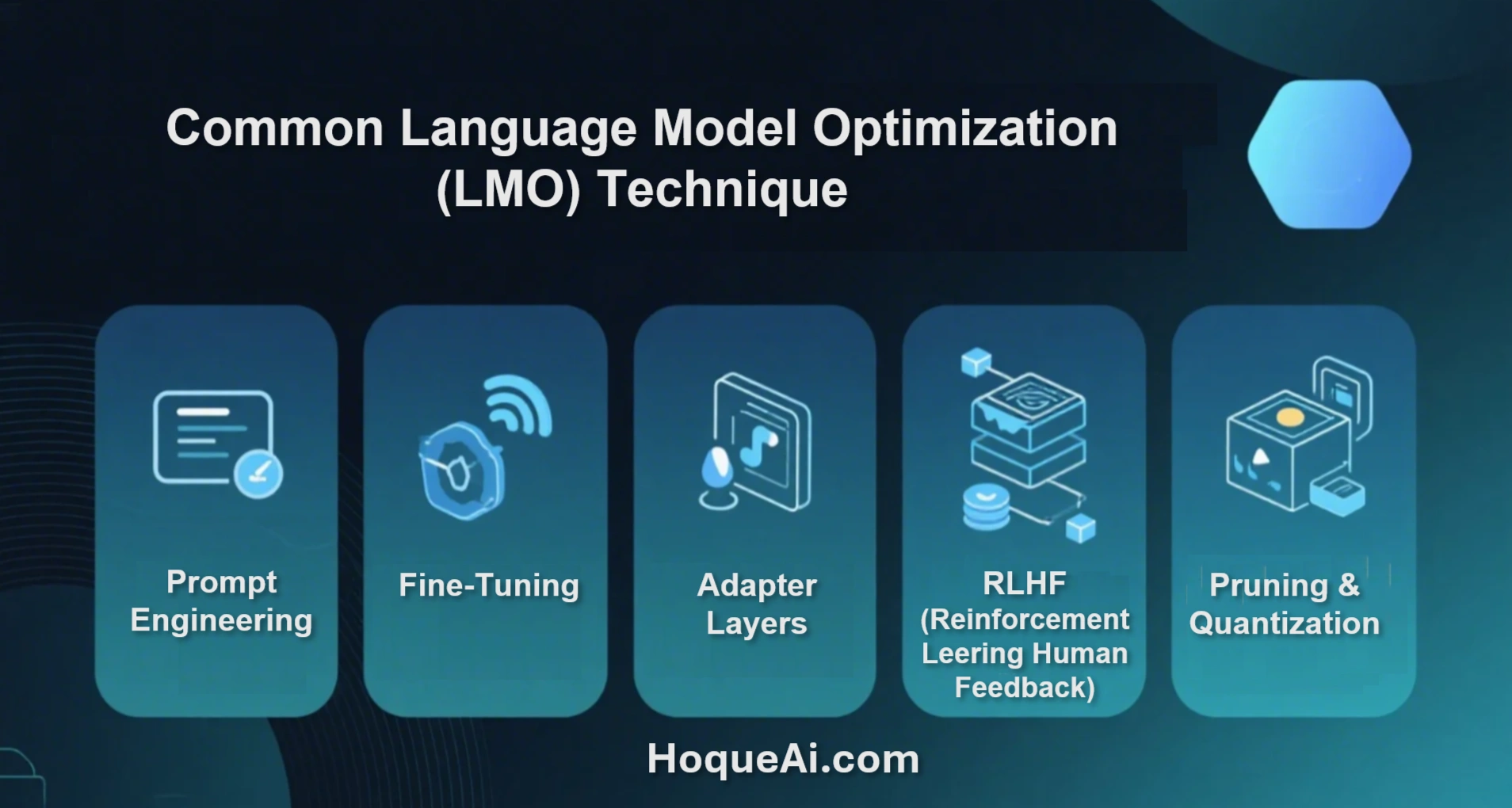

🧠 Common LMO Techniques

AI Generated image: Common Language Model Optimization Techniques

- Prompt Engineering: Without changing the model, you can guide behavior using well-structured prompts. It’s simple but effective in many low-resource settings.

- Fine-Tuning: This involves training the base model further on a domain-specific dataset. For example, you might fine-tune a GPT-style model on customer support chat logs to improve chatbot accuracy.

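- Adapter Layers: Instead of fine-tuning the entire model, you keep the base model frozen and train small added modules (adapters) on your task-specific data. Because only the adapters are trained, this is resource-efficient and modular: different adapters can be swapped in for different tasks.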

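- Reinforcement Learning from Human Feedback (RLHF): Popularized by OpenAI, RLHF collects human rankings of model outputs, trains a reward model on those preferences, and then uses reinforcement learning to optimize the language model against that reward. It's powerful for aligning language output with nuanced human expectations.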

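- Pruning and Quantization: Pruning removes weights that contribute little to the output, and quantization stores weights at lower numeric precision (for example, 8-bit integers instead of 32-bit floats). Both reduce model size, often with only a small loss in quality, which helps when deploying models on mobile or edge devices.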
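To make the last item more concrete, here is a toy sketch of magnitude pruning and symmetric 8-bit quantization applied to a short list of floats standing in for one weight tensor. The function names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the sample weights are hypothetical, chosen only for illustration; real toolkits apply these ideas to whole tensors with more elaborate schemes (per-channel scales, calibration data, structured pruning).

```python
"""Toy sketch of magnitude pruning and symmetric 8-bit quantization.

Hypothetical helpers operating on a plain list of floats that stands in
for one weight tensor; not any framework's API.
"""


def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp to the int8 range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    w = [0.42, -0.03, 0.91, -0.77, 0.05, 0.28, -0.61, 0.01]  # made-up weights
    pruned = magnitude_prune(w, sparsity=0.25)
    q, scale = quantize_int8(pruned)
    approx = dequantize(q, scale)
    print("pruned:  ", pruned)
    print("int8:    ", q)
    print("restored:", [round(x, 3) for x in approx])
    # Each int8 code needs 1 byte versus 4 bytes for a float32 weight.
    print("max error:", max(abs(a - b) for a, b in zip(pruned, approx)))
```

Storing each weight as one signed byte instead of a 4-byte float cuts that tensor's storage roughly fourfold, at the cost of a rounding error of at most half the scale factor per weight.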

🛠 Tools and Frameworks for LMO


- Hugging Face Transformers – Widely used for fine-tuning and prompt engineering.

- OpenAI Playground / API – Great for testing prompts and tuning behavior interactively.

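- LoRA (Low-Rank Adaptation) – A parameter-efficient fine-tuning method, implemented in libraries such as Hugging Face PEFT, that trains small low-rank update matrices instead of all model weights, making it practical to fine-tune large models with limited compute.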

- LangChain / LlamaIndex – Tools to structure language model pipelines with retrieval, memory, and chaining.
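The core idea behind LoRA can be sketched in plain Python. Following the LoRA formulation, a frozen weight matrix W (d x k) is combined with two small trainable matrices, B (d x r) and A (r x k), giving an effective weight W + (alpha / r) * B @ A. This is a minimal sketch under that assumption, not the PEFT library's API; the helper names (`matmul`, `lora_effective_weight`, `lora_param_counts`) and the matrix sizes are hypothetical.

```python
"""Minimal pure-Python sketch of the LoRA weight update.

Hypothetical helpers: the frozen weight W (d x k) stays unchanged; only the
small matrices B (d x r) and A (r x k) would be trained.
"""
import random


def matmul(X, Y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]


def lora_effective_weight(W, A, B, alpha):
    """Return W + (alpha / r) * B @ A, where r is the rank (rows of A)."""
    r = len(A)
    delta = matmul(B, A)  # d x k low-rank update
    s = alpha / r
    return [[w + s * dw for w, dw in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


def lora_param_counts(d, k, r):
    """Trainable parameters: full fine-tuning vs. a rank-r LoRA update."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    d, k, r = 4, 6, 2
    rng = random.Random(0)
    W = [[rng.gauss(0, 1) for _ in range(k)] for _ in range(d)]
    A = [[rng.gauss(0, 0.01) for _ in range(k)] for _ in range(r)]
    B = [[0.0] * r for _ in range(d)]  # B starts at zero
    # With B = 0 the effective weight equals the frozen base weight.
    print(lora_effective_weight(W, A, B, alpha=16) == W)  # True
    print(lora_param_counts(4096, 4096, 8))  # (16777216, 65536)
```

Because B starts at zero, the adapted model initially behaves exactly like the base model. For a 4096 x 4096 layer with rank 8, only 65,536 of its 16,777,216 weights would be trained.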

📚 Example: Optimizing a Legal Chatbot

Let’s say you’re building a chatbot to help users understand legal terms in employment contracts. A base model might confuse or oversimplify these concepts. With LMO, you could:

- Supply a glossary of legal terms, either in the prompt or as training data.

- Fine-tune it with Q&A data from legal forums.

- Iterate on prompts until they produce more precise definitions.

- Apply RLHF by asking lawyers to rate answers and using those ratings to guide training.

Result: A more reliable, trustworthy assistant.
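One simple way to give the model a glossary without any training is to look up the terms a user mentions and place their definitions in the prompt. The sketch below assumes a small in-memory dictionary; `GLOSSARY`, `find_terms`, and `build_prompt` are hypothetical names, and the definitions are short placeholder entries rather than a reviewed legal glossary. The resulting string would be sent to whichever model or API you use.

```python
"""Sketch: inject matching glossary definitions into a chatbot prompt.

GLOSSARY, find_terms, and build_prompt are hypothetical; the entries are
placeholders standing in for a reviewed employment-law glossary.
"""

GLOSSARY = {
    "at-will employment": "Either the employer or the employee may end the "
    "employment at any time, for any lawful reason.",
    "non-compete clause": "A contract term that limits an employee from "
    "working for competitors for a set period after leaving.",
    "severance": "Pay or benefits an employer may provide when employment "
    "ends, for example after a layoff.",
}


def find_terms(question, glossary):
    """Return glossary terms that appear in the question (case-insensitive)."""
    q = question.lower()
    return [term for term in glossary if term in q]


def build_prompt(question, glossary=GLOSSARY):
    """Assemble instructions, matched definitions, and the user question."""
    defs = [f"- {t}: {glossary[t]}" for t in find_terms(question, glossary)]
    lines = [
        "You explain employment-contract terms in plain English.",
        "Base your answer on the definitions below when they cover the term.",
        "",
        "Definitions:",
    ]
    lines += defs if defs else ["- (no glossary terms matched)"]
    lines += ["", f"Question: {question}", "Answer:"]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_prompt("Is my non-compete clause enforceable after severance?"))
```

Plain substring matching keeps the sketch short; a real system would likely also handle plurals and synonyms, or use a retrieval framework such as LangChain or LlamaIndex.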

✅ Best Practices for Beginners

- Start Simple – Use prompt engineering before jumping into fine-tuning.

- Use Clean Data – High-quality, relevant data is essential.

- Test Regularly – Run outputs by real users or domain experts.

- Monitor for Bias – LMs can unintentionally reflect harmful stereotypes.

- Document Everything – Record how you optimize and why.

🧩 Final Thoughts

Language Model Optimization is the bridge between powerful generic models and effective real-world applications. Whether you’re building a customer support agent, summarizing medical notes, or creating an educational tutor — LMO helps you get the most out of your AI.

As LMs continue to grow in size and capability, the ability to optimize them is likely to become an increasingly valuable AI skill. Start small, learn iteratively, and grow your impact.

www.HoqueAi.com
