AI Generated image: Detect spam emails using AI and Support Vector Machines (SVM)

This project explores the use of Support Vector Machines (SVM) to accurately classify emails as spam or not by leveraging natural language processing and text preprocessing techniques.

AI-Powered Spam Detection Using Support Vector Machines


In this project, we developed a machine learning model to classify email messages as spam or non-spam using a Support Vector Machine (SVM). The project involved preprocessing raw emails, extracting key features, and training a classifier to identify spam indicators effectively. The approach included tokenization, stemming, and mapping words to a predefined vocabulary before converting emails into feature vectors. The model was trained using a dataset of labeled emails and evaluated on a separate test set. The goal was to build a high-accuracy spam detection system that minimizes false positives while efficiently filtering unwanted emails.

We use the following Python libraries:

Python:

%matplotlib inline
import re                          # regular expressions for e-mail processing

import numpy as np
import matplotlib.pyplot as plt
import scipy.io                    # used to load the Octave *.mat files
import nltk, nltk.stem.porter      # Porter stemmer used during tokenization
from sklearn import svm            # SVM implementation
from stemming.porter2 import stem  # alternative stemmer (not used in the snippets below)

The email content is read from a text file and displayed as follows:

Python:

file_path = r'd:\mlprojects\data\emailSample1.txt'  # raw string keeps the backslashes literal

with open(file_path, 'r', encoding='utf-8') as file:
    content = file.read()

print(content)  # display the contents

Output:

Anyone knows how much it costs to host a web portal? Well, it depends on how many visitors you're expecting. This can be anywhere from less than 10 bucks a month to a couple of $100. You should checkout http://www.rackspace.com/ or perhaps Amazon EC2. To unsubscribe yourself from this mailing list, send an email to: groupname-unsubscribe@egroups.com

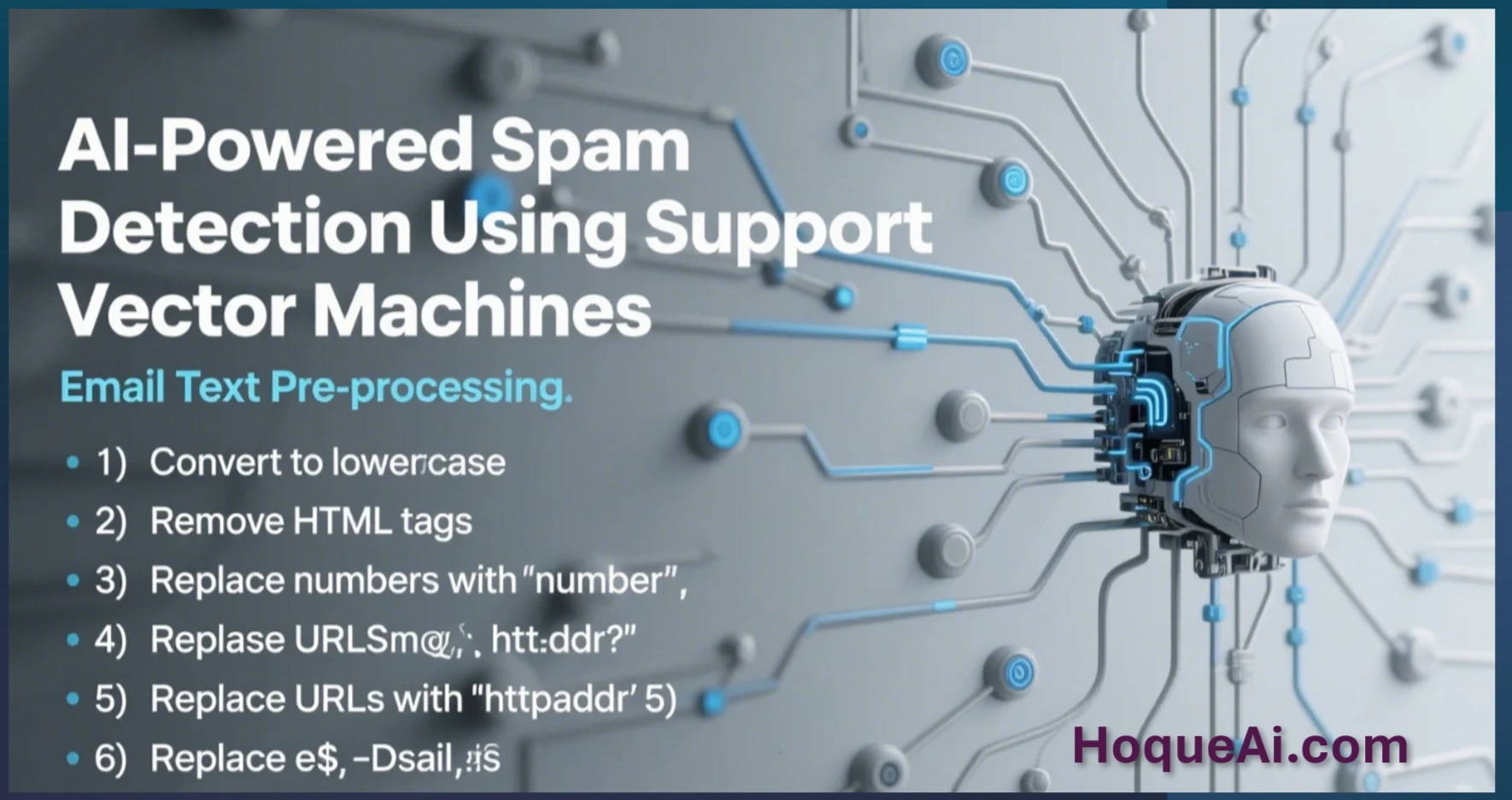

AI Generated image: Email Text Pre-processing

Before analyzing the text, we apply preprocessing steps:

def preProcess(email):
    email = email.lower()                                        # make the entire e-mail lower case
    email = re.sub(r'<[^<>]+>', ' ', email)                      # remove HTML tags
    email = re.sub(r'[0-9]+', 'number', email)                   # replace digit runs with 'number'
    email = re.sub(r'(http|https)://[^\s]*', 'httpaddr', email)  # replace URLs with 'httpaddr'
    email = re.sub(r'[^\s]+@[^\s]+', 'emailaddr', email)         # strings with "@" --> 'emailaddr'
    email = re.sub(r'[$]+', 'dollar', email)                     # '$' sign replaced with 'dollar'
    return email
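To make the effect of these substitutions concrete, here is a minimal, self-contained sketch that applies the same replacements in the same order to a made-up message. The name `normalize_email` and the sample text are assumptions for illustration, not part of the project code.

```python
import re

def normalize_email(text):
    """Apply the substitutions described above, in the same order (illustrative copy)."""
    text = text.lower()
    text = re.sub(r'<[^<>]+>', ' ', text)                      # drop HTML tags
    text = re.sub(r'[0-9]+', 'number', text)                   # digit runs -> 'number'
    text = re.sub(r'(http|https)://[^\s]*', 'httpaddr', text)  # URLs -> 'httpaddr'
    text = re.sub(r'[^\s]+@[^\s]+', 'emailaddr', text)         # addresses -> 'emailaddr'
    text = re.sub(r'[$]+', 'dollar', text)                     # '$' -> 'dollar'
    return text

# Hypothetical sample message
sample = "Visit <b>http://example.com</b> or mail bob@example.org for only $25"
print(normalize_email(sample).split())
# ['visit', 'httpaddr', 'or', 'mail', 'emailaddr', 'for', 'only', 'dollarnumber']
```

Because the replacements are inserted without surrounding spaces, "$25" collapses into the single token "dollarnumber".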

After preprocessing, the email is tokenized and stemmed.

def email2TokenList(raw_email):
    """Preprocess an e-mail, split it into tokens, and stem each token."""
    stemmer = nltk.stem.porter.PorterStemmer()
    email = preProcess(raw_email)
    # Split on whitespace (including newlines) and punctuation
    tokens = re.split(r'[\s\@\$\/\#\.\-\:\&\*\+\=\[\]\?\!\(\)\{\}\,\'\"\>\_\<\;\%]', email)
    tokenlist = []
    for token in tokens:
        token = re.sub(r'[^a-zA-Z0-9]', '', token)  # keep alphanumeric characters only
        if not token:
            continue  # skip empty fragments before stemming
        tokenlist.append(stemmer.stem(token))
    return tokenlist

Example:

Original: "Running quickly! Buy $100 worth at Amazon.com"

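Processed Tokens: ['run', 'quickli', 'buy', 'dollarnumb', 'worth', 'at', 'amazon', 'com']

Here "$100" becomes "dollarnumber" (stemmed to "dollarnumb") because both replacements are inserted without spaces. No stop words are removed, so "at" stays, and "Amazon.com" is split at the period.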
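The splitting step can be looked at in isolation. The sketch below is an assumption-laden illustration: `split_tokens` and `DELIMITERS` are hypothetical names, the input is an already preprocessed string, and stemming is left out so that only the standard library is needed.

```python
import re

# Delimiter characters modeled on the tokenizer above (whitespace included)
DELIMITERS = r"[\s@$/#.\-:&*+=\[\]?!(){},'\">_<;%]"

def split_tokens(text):
    """Split on delimiters, keep only alphanumerics, drop empty fragments."""
    tokens = []
    for fragment in re.split(DELIMITERS, text):
        cleaned = re.sub(r'[^a-zA-Z0-9]', '', fragment)
        if cleaned:  # adjacent delimiters such as "! " produce empty fragments
            tokens.append(cleaned)
    return tokens

print(split_tokens("running quickly! buy dollarnumber worth at amazon.com"))
# ['running', 'quickly', 'buy', 'dollarnumber', 'worth', 'at', 'amazon', 'com']
```

Passing each of these tokens through a Porter stemmer would then yield the stemmed forms.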

The function getVocabDict() loads a predefined vocabulary list where each word is assigned a unique index.

def getVocabDict():
    vocab_dict = {}
    with open("vocab.txt", "r") as file:
        for line in file:
            index, word = line.split()    # each line holds "<index> <word>"
            vocab_dict[word] = int(index)
    return vocab_dict
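As a quick check of the parsing logic without needing the real vocabulary file, the same idea can be run against an in-memory file. This is only a sketch: `parse_vocab` and the three-word vocabulary are made up for illustration.

```python
import io

def parse_vocab(lines):
    """Map each word to its integer index from '<index> <word>' lines."""
    vocab = {}
    for line in lines:
        if not line.strip():
            continue  # tolerate blank lines
        index, word = line.split()
        vocab[word] = int(index)
    return vocab

# Hypothetical three-entry vocabulary, indexed from 1
toy_file = io.StringIO("1\tbuy\n2\tdollarnumb\n3\trun\n")
toy_vocab = parse_vocab(toy_file)
print(toy_vocab)  # {'buy': 1, 'dollarnumb': 2, 'run': 3}
```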

The function email2FeatureVector() processes an email and converts it into a binary feature vector.

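def email2FeatureVector(email, vocab_dict):
    feature_vector = np.zeros(len(vocab_dict))
    # Use the preprocessed, stemmed tokens so that words match the vocabulary entries
    for word in email2TokenList(email):
        if word in vocab_dict:
            feature_vector[vocab_dict[word] - 1] = 1  # vocabulary indices start at 1
    return feature_vector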
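A small worked example may help show what "binary" means here: repeated words count once, and words outside the vocabulary are ignored. The sketch uses a plain Python list instead of a NumPy array so it has no dependencies; `tokens_to_vector` and the toy vocabulary are hypothetical.

```python
def tokens_to_vector(tokens, vocab):
    """Return a 0/1 list with a 1 at each position whose vocabulary word occurs."""
    vector = [0] * len(vocab)
    for token in tokens:
        index = vocab.get(token)
        if index is not None:
            vector[index - 1] = 1  # vocabulary is 1-indexed, the list is 0-indexed
    return vector

toy_vocab = {'buy': 1, 'dollarnumb': 2, 'run': 3}
print(tokens_to_vector(['run', 'buy', 'buy', 'zebra'], toy_vocab))  # [1, 0, 1]
```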

The dataset consists of labeled spam and non-spam emails. We train an SVM with a linear kernel using a regularization parameter of C=0.1.

from sklearn.svm import SVC

classifier = SVC(C=0.1, kernel='linear')
classifier.fit(X_train, y_train)
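For reference, a linear soft-margin SVM is usually described as minimizing the objective below. This is the standard textbook formulation, not something specific to this project: the symbols $w$, $b$, $x_i$, and the label encoding $y_i \in \{-1, +1\}$ are notation introduced here, $m$ is the number of training emails, and $C$ plays the role of the `C=0.1` argument.

$$
\min_{w,\,b}\; \frac{1}{2}\lVert w \rVert^2 + C \sum_{i=1}^{m} \max\bigl(0,\; 1 - y_i\,(w^\top x_i + b)\bigr)
$$

A smaller $C$ gives the margin term more weight and tolerates more training errors, which acts as stronger regularization.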

After training, we evaluate the accuracy of the model on both training and test data:

train_accuracy = classifier.score(X_train, y_train) * 100
test_accuracy = classifier.score(X_test, y_test) * 100

print(f"Training Accuracy: {train_accuracy:.2f}%")
print(f"Test Accuracy: {test_accuracy:.2f}%")
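Accuracy alone does not show how often legitimate mail is flagged, which matters given the goal of minimizing false positives. The following sketch computes accuracy and the false-positive rate from label lists. It assumes spam is encoded as 1 and non-spam as 0; the function name and the toy labels are made up for illustration.

```python
def accuracy_and_fpr(y_true, y_pred):
    """Return (accuracy, false-positive rate), assuming 1 = spam and 0 = non-spam."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    false_pos = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    negatives = sum(t == 0 for t in y_true)
    accuracy = correct / len(y_true)
    fpr = false_pos / negatives if negatives else 0.0
    return accuracy, fpr

# Hypothetical labels and predictions for eight emails
y_true = [1, 1, 0, 0, 0, 1, 0, 0]
y_pred = [1, 0, 0, 0, 1, 1, 0, 0]
print(accuracy_and_fpr(y_true, y_pred))  # (0.75, 0.2)
```

Here one of the five legitimate emails is misclassified as spam, giving a false-positive rate of 20% even though overall accuracy is 75%.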

AI Generated image: Words that are spam in email.

The trained SVM provides insight into which words contribute most to spam classification.

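weights = classifier.coef_.flatten()
top_indices = np.argsort(weights)[-15:][::-1]  # positions of the 15 largest weights, largest first
index_to_word = {index: word for word, index in vocab_dict.items()}
# weights are 0-indexed while the vocabulary is 1-indexed, hence the + 1
important_words = [index_to_word[i + 1] for i in top_indices]
print("Top spam-indicative words:", important_words)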
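The mapping from weight positions back to words is easy to get wrong by one when the vocabulary is indexed from 1. The sketch below illustrates the lookup on toy data with plain Python lists; `top_words`, the four-word vocabulary, and the weights are all hypothetical.

```python
def top_words(weights, vocab, k):
    """Return the k words with the largest weights, largest first."""
    index_to_word = {index: word for word, index in vocab.items()}
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    # position i in the weight list corresponds to vocabulary index i + 1
    return [index_to_word[i + 1] for i in order[:k]]

toy_vocab = {'aa': 1, 'click': 2, 'linux': 3, 'offer': 4}
toy_weights = [0.1, 0.9, -0.5, 0.4]
print(top_words(toy_weights, toy_vocab, 2))  # ['click', 'offer']
```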

Running the code produced the following output:

The 15 most important words to classify a spam e-mail are:

['otherwis', 'clearli', 'remot', 'gt', 'visa', 'base', 'doesn', 'wife', 'previous', 'player', 'mortgag', 'natur', 'll', 'futur', 'hot']

The 15 least important words to classify a spam e-mail are:

['http', 'toll', 'xp', 'ratio', 'august', 'unsubscrib', 'useless', 'numberth', 'round', 'linux', 'datapow', 'wrong', 'urgent', 'that', 'spam']

Spam emails containing "otherwis": 804/1277 = 62.96%

Non-spam emails containing "otherwis": 301/2723 = 11.05%
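The two percentages follow directly from the counts. As a small arithmetic check, using only the numbers reported above (the variable names are illustrative):

```python
# Counts reported above for the stem "otherwis"
spam_with, spam_total = 804, 1277
ham_with, ham_total = 301, 2723

spam_rate = 100 * spam_with / spam_total
ham_rate = 100 * ham_with / ham_total

print(f"spam: {spam_rate:.2f}%  non-spam: {ham_rate:.2f}%")
# spam: 62.96%  non-spam: 11.05%
print(f"ratio: {spam_rate / ham_rate:.1f}x")
# ratio: 5.7x
```

In other words, the stem appears in spam roughly 5.7 times as often as in non-spam, across 1277 + 2723 = 4000 emails.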

The project produced a spam classifier that reached 99.83% training accuracy and 98.90% test accuracy using an SVM with a linear kernel. The system identified key words and patterns associated with spam, demonstrating its effectiveness in filtering unwanted emails. The project also provided practical insight into natural language processing (NLP) techniques and feature engineering for text classification. Future enhancements could include deep learning techniques or improved feature extraction to adapt to evolving spam strategies.

I sincerely thank Prof. Andrew Ng (DeepLearning.AI, Stanford University) for his inspiring courses, which laid the foundation for this project.